Music & The Machines

· Music, Machines, Misfires · Tarantino’s Revenge · Iceland’s AI landscapes ·

We now know that tens of thousands of fully synthetic tracks arrive at streaming services every single day, slipping quietly into playlists or recommendations built for passive listening. Listeners can’t tell the difference. Platforms don’t seem in a hurry to help. And artists- across continents, genres, and generations- have spent a lot of 2025 waving red flags about erosion, consent, and compensation. Many music fans have little idea; others are simply left shaking their heads in bafflement.

A very human industry is increasingly shaped by non-human volume. The Colour Bar tracked this drift: 2025 looks like the year ‘music’s next frontier’ became ‘music’s next flood.’

🎧 Prefer to listen? Hit play above to listen to me read this week’s dispatch.

Music & the Machines- AI isn’t just background noise anymore

▶️ Curated/Cuts: Iceland’s AI Landscapes & Tarantino’s Revenge via Fortnite

Warner x Netflix x Paramount: the deal, the drama, the HBO-shaped question mark

AI on The Dot: notes from a ‘neuomorphic’ creative salon

➕ ESPN in Asia? Squid Game America? Whither music videos?

Music, Machines, Misfires

Back in January I wrote that music streaming service Deezer launched a new AI detection tool, revealing their new tech had already discovered that roughly 10,000 ‘fully AI-generated tracks’ were being delivered to its platform every day. That amounted to about 10% of the daily content delivered to Deezer.

Some 10 months later, Deezer has said it now receives over 50,000 fully AI-generated tracks daily, or 34% of all tracks delivered to the streamer. Let that sink in. (Just in September, that number was 30,000.)

Note- Deezer remains the only platform to detect and explicitly tag 100% AI-generated content.

This came alongside the results of a survey Deezer commissioned from Ipsos, covering 9,000 participants across eight countries. Some key findings:

97% of the respondents failed to distinguish between AI- and human-generated tracks.

45% of music streaming users would like to filter out 100% AI-generated music from their platform.

Maybe more hearteningly, 80% agree that 100% AI-generated music should be clearly labeled to listeners; and

73% of music streaming users would like to know if a music streaming service is recommending 100% AI-generated music.

Somehow, I am still having conversations about whether gen-AI music is really a thing. Well, it became a thing, and is well on its way to becoming more things.

It’s been quite the year for generative AI & music.

The conversation around AI and music stopped being abstract and started showing up in contracts, lawsuits, playlists… and charts. Across explorations in The Colour Bar, a few themes were persistent: artists warning about erosion, tech companies insisting they’re “democratising creativity”, and platforms quietly hoping not too many questions get asked.

The tone was set early in the year, when I had to take a moment to absorb a stunning view from Suno AI’s CEO, Mikey Shulman.

“It’s not really enjoyable to make music now,” he said, before comparing his company’s ambitions to video games. As musicians across the world gagged, he said, “the majority of people don’t enjoy the majority of the time they spend making music.” In his ideal world, music should be something you “play” the way you half-play Fortnite while your friend chats in your ear. This, from a company with a “deep love and respect for music.” His message: music-making is tedious drudgery, let the machines handle it.

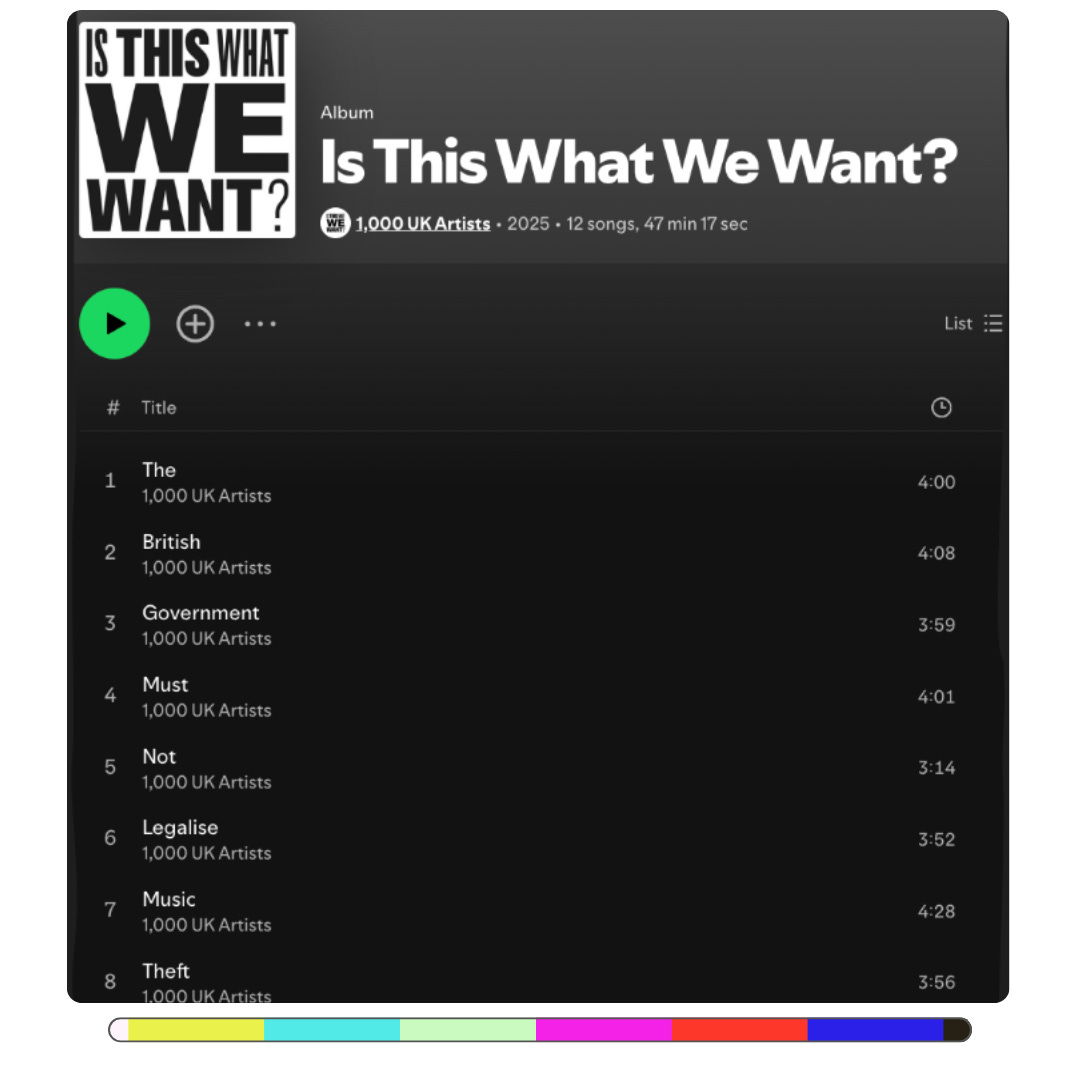

In February, over a thousand artists in the UK asked: what happens to a creative economy when you legalise unlicensed training? Their protest album, Is This What We Want?, was an album of silence, empty stages, unplayed instruments. A clever, devastating metaphor for what UK copyright proposals risked hollowing out, shared by the likes of Annie Lennox, Hans Zimmer, Kate Bush, Riz Ahmed and others. The matter remains unresolved, with voices across many creative fields continuing to push for what they believe is an issue of not just copyright, but the health of creative communities at large.

A different, welcome critique came from outside the Western bubble, where African voices showed that the same divide over the place of AI exists there too. Concerns spanned the predictable, Western-skewed biases baked into most large models, and the appropriation of the continent’s music in ways that could leave it shorn of its identity.

Synthetic Success.

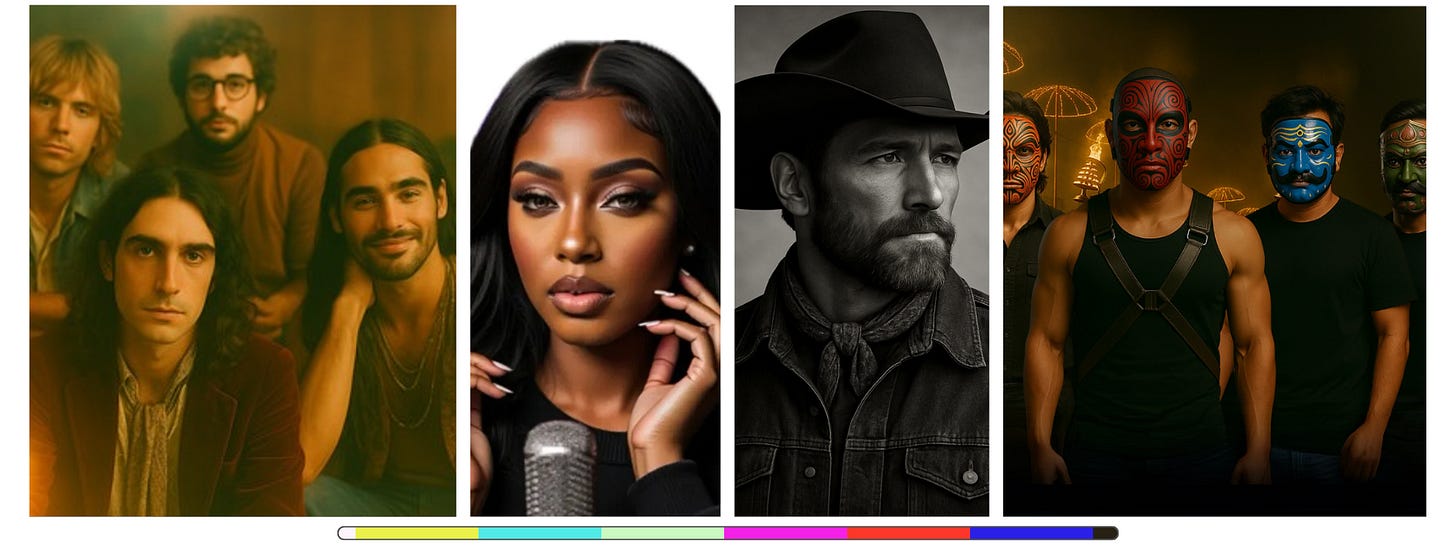

One of the stranger episodes of the year was the mysteriously popular folk-rock act that materialised on Spotify, hoovered up playlist placements, and cruised to over a million monthly listeners.

The Velvet Sundown had folk-rock aesthetics, rapid growth and a profile that didn’t quite add up. Of course, it was then called “a synthetic music project”, with a description cloaked in artistic statements about pushing the edges. There were more twists in the story too.

“What may now be the most successful AI group on Spotify is merely, profoundly, and disturbingly innocuous. In that sense, it signifies the fate of music that is streamed online and then imbibed while one drives, cooks, cleans, works, exercises, or does any other prosaic act.” from The Atlantic

Close on its heels, over in India, there was Trilok, an “AI-generated Indian band that wants to pair spiritual tradition with contemporary rock.” Or, if you prefer, “India’s sonic past reassembled for a future audience”. Trilok later released a music video that looks like a me-too version of Glass Beams but- incredibly- featured real humans playing the physical versions of unreal digital personas that make up the band. Phew! In creating music of the kind that already exists in a region overflowing with talent, the question always is- who needs this, why is this being done?

The answer, I’m afraid, seems wrapped up in one symbol: $.

But the synthetic success continued. Xania Monet, an AI-generated R&B artist, signed a $3m deal. Xania is actually the output of words by Telisha Jones, a Mississippi poet who uses Suno to channel her lyrics into a coherent persona. We also got Breaking Rust, an AI-generated country cowboy ‘artist’, whose song Walk My Walk rippled onto a slightly obscure Billboard chart (as did Xania).

“Entertainment has ever been the art of reference and illusion… which is why artists’ concerns swirl around AI’s capacity not to replace their creativity but as a potential channel for their exploitation.” Van Badham

My own instinctive recoil still stands: music is emotional resonance. A song carries- in whatever measure or shape- someone’s lived experience, or pain, or joy, or the weird in-between, or sometimes something much more trivial. But real. A song generated via an optimisation loop feels opportunistic, hollow… and utterly unnecessary in my life.

But if the tunes are kinda your thing, are you ok with them playing in your ears and your living room? Or does provenance matter? Wherever you might stand, these are undeniably all shadows of a new ecosystem, where being a human artist is no longer the default.

Platforms & Guardrails.

The thing is- a lot of music is consumed passively on streaming platforms, with playlists and suggestions leading the way. That’s where a lot of AI generated music finds it easy to slot in, arm in arm with the realities of ‘perfect-fit’ playlists and ‘ghost artist’ supply pipelines.

Spotify’s response to this chaos was…underwhelming? Their newly announced measures to deal with AI-generated content largely shift responsibility onto artists/providers to self-label their work.

There was the UMG–Udio saga: a lawsuit that morphed into a partnership with a very 2025 outcome- a walled-garden AI music platform. Udio can now access UMG’s catalog (only with artist opt-in), but users can only listen to their re-somethinged creations within that platform. No downloads. No exporting. Cue outrage from users whose worldview has been shaped by years of “the internet owes me free derivative content.”

Meanwhile, Warner Music has reached a settlement with Suno (the other big labels are still in litigation), one that requires artists to opt in. What that means for the training already done on artists’ music, I can’t tell.

Suno, for its part, represents the wider ‘content’ and ‘engagement’ play that much of tech & entertainment have become. This, from their investor deck: “Suno creates an entire Spotify catalog’s worth of music every two weeks”.

It’s volume as virtue.

“[AI] produces content at volumes and speeds no human can match, and, crucially, it produces content that people will accept as real enough to give their attention to. That’s the breakthrough: not that AI makes better ‘music,’ but that it makes acceptable ‘music’ at an unlimited scale.” Jeffrey Anthony

Which brings us neatly back to Deezer’s numbers. 10,000 AI-generated tracks a day in January; 50,000 by October. A third of daily uploads. We don’t know those numbers for Spotify or Apple Music, but they’re unlikely to be vastly different. And most listeners can’t tell the difference. They’re not supposed to. Platforms continue to be quite happy with quantity over clarity, and many listeners will hum along obliviously.

80% of listeners want AI clearly labelled (note- clearly!) and 73% want to know when algorithms are pushing it at them. I hope this is representative of a wider desire for conscious listening. But it shows the real tension that 2025 seems to have forced into the open. We have splashed past “can machines make songs?”, and we are now swimming in the deep about who benefits, who gets erased, who gets compensated, and who gets inundated.

Maybe the questions are much simpler.

As many fans say, “who asked for this?”, or “why do we need this?”

🎬 Curated/Cuts.

1. Iceland’s AI landscapes

Wait, what- Iceland is AI generated?

If it looks too good to be real, then it probably isn’t? At a time when doubt and mistrust about pretty much anything we see online is getting into third gear, Icelandair concurred with the social media quip that the country might be entirely AI-generated.

Director: Reynir Lyngdal · Production: Republik

ECD: Emils Lukasevics · Agency: Kubbco

2. Tarantino’s Revenge

Tarantino’s Kill Bill appetites were never sated. Here is a trailer for “The Lost Chapter: Yuki’s Revenge”, an animated short that is part of the limited-run release of ‘Kill Bill The Whole Bloody Affair’.

The short is also out on YouTube, already. Starring Uma Thurman, it saw Tarantino work with Epic Games’ Unreal Engine and THE THIRD FLOOR Inc. to release the film in Fortnite.

Netflix x WarnerBros (x Paramount)

There is way more written about the Warner-Netflix-Paramount drama than any of us can reasonably read. But the saga of how Netflix pulled it off was probably laid out best in this insider account from Lucas Shaw. Which might well have a few more chapters added on by the time Ellison and their hostile takeover attempt is done.

The chat around which option is better for whom (fans, consumers, creatives, industry, employees) is a maze of if-this-then-that. One thing I repeatedly wonder- what chance does the brilliant brand of HBO and its quality have to come through relatively unscathed? If you worry about the potential fallout for movies, this old piece lays right into Netflix, though there is ample evidence that any merger here is a net negative for the wider creative community and sector.

AI On The Dot

I dropped by AI on The Dot last week, a ‘cultural salon’ hosted by the same duo that has run the very popular AI on the Lot event in Los Angeles for the past couple of years.

This was a small, curated attempt to splice Hollywood’s creative-AI mindset into the APAC conversation. I like that it was largely shorn of the breathlessness around AI that often marks such gatherings, though the optimistic energy around it remained palpable.

The ‘word of the evening’ was neuomorphic. It’s Mike Gioia aka Intelligent Jello’s antonym of sorts to ‘skeuomorphic’, referring to how we can look at video creation coming from an AI-powered world. It’s been used well in Doug Shapiro’s essay, to try and move beyond looking at genAI as merely a new way to make movies & TV.

Themes orbited familiar territory- speed, scale, possibility- but the conversations (mostly!) had a grounded quality. Speed and its impact on storytelling- both the craft and numbers- was a recurrent theme; as was chat around smaller teams, sharper focus, traditional instincts. And, fascinatingly, about deeper integrations into workflows and pipelines.

Think along the lines of, ‘not so much “AI replaces production”, more “AI reveals who can actually tell a story when the tools stop being the bottleneck.”’

Some nodding of heads about how we are moving, or need to move, from “look, the model can generate amazing video” to “can it generate something that feels like intent?”

Notably (but unsurprisingly?) absent: any real discussion on ethics or model provenance. The only practical concern raised was indemnity- “please tell me my outputs won’t explode legally!”

With ambitions to make this a larger, recurring event in the APAC calendar, I can see how AI on The Dot could grow into something meaningful- and hopefully will stay curious, lightly ambitious, not so much selling the future as poking & prodding at it.

➕ Quick hits

Disney+ and ESPN’s ambitions in Asia. Hmmm.

Some updates on the Squid Game America adaptation, confirmed to be helmed by David Fincher (with Cate Blanchett on board), starting filming early 2026.

As MTV fades away, a piece on the relevance of music videos.

My other Substack newsletter, Coffee & Conversations, has already waded into the end of year mood. Munch on a beloved snack and stroll through worthy reads scattered across the months- the Masala Peanuts of C&C fame.

If you enjoy reading The Colour Bar, maybe get me a coffee ☕ and help fuel the next one?

And do support by sharing pieces you like, or forwarding to others who might enjoy it.

Cover photo by Jabber Visuals on Unsplash